How do audio files work?

To better understand how audio files work, let’s look at some basics about how sound works in general.

Starting with a vibration

Every sound is created by vibrations that travel as sound waves. We typically hear them through air conduction (waves traveling through the air and into our ear canals), but we can also hear them through solid materials and through bone conduction (vibrations passing through the skull to the bones of the ear). In fact, sound often travels more efficiently through liquids and solids than through air. For example, have you ever noticed that sounds are much louder (albeit less distinct) under water? If you have ever wondered why people put their ears against a door to ‘hear through it,’ this is why. Likewise, brushing your teeth sounds much louder to you than it does to someone standing nearby, because the bones in your head conduct the sound better than the air does.

Examining the Waveform

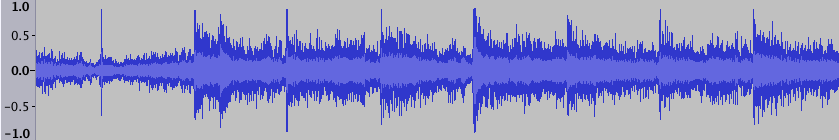

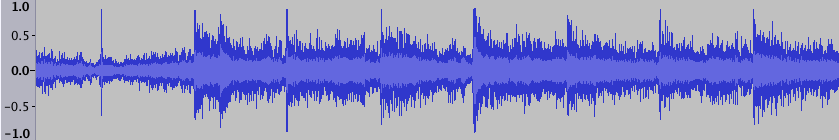

Sound waves can be analyzed through a visual representation called a waveform. Whenever you edit sound or view it on a timeline in a digital application, you will likely see a waveform that shows you what is happening in the sound file.

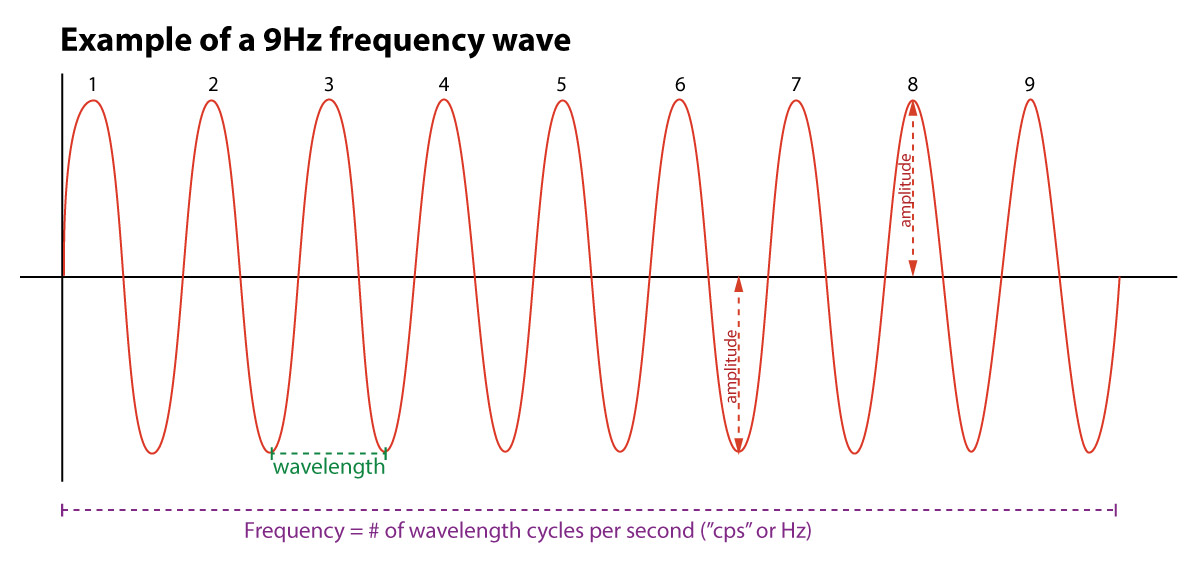

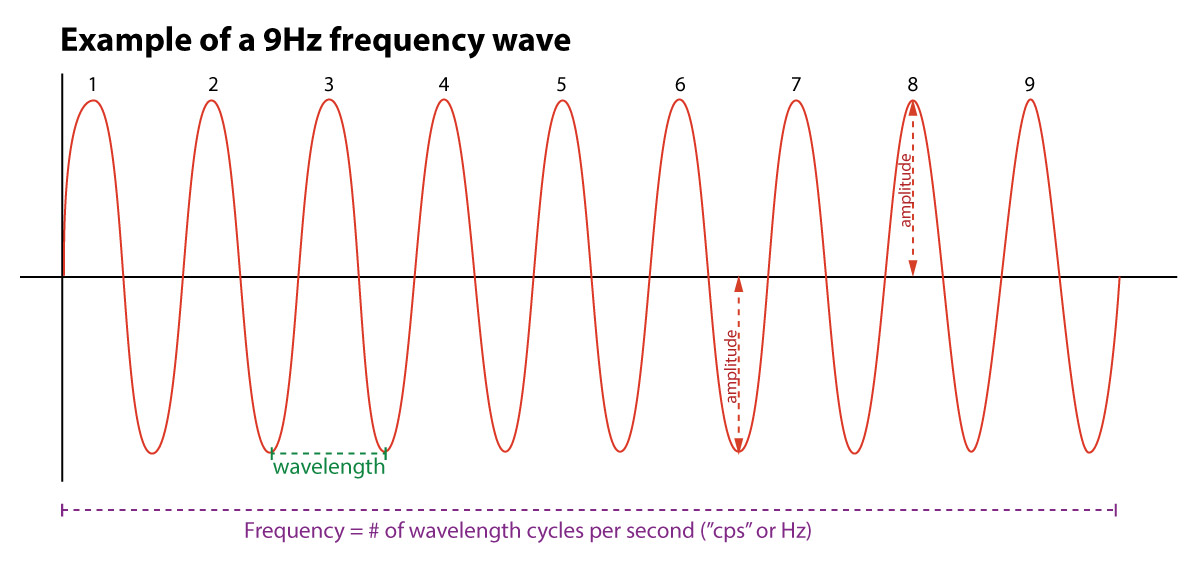

To understand what is happening in a waveform, let’s look at wavelength, frequency, and amplitude.

- wavelength

- The distance from one crest to the next crest in a sound wave.

- frequency

- Frequency is a measure of the number of complete wave cycles in a second (cycles per second, or ‘cps’). This unit is more commonly called the hertz (Hz), named after the physicist Heinrich Hertz, who first demonstrated the existence of electromagnetic waves. You read frequency horizontally across a waveform: the more cycles that fit into a given span of time, the higher the frequency.

- pitch

- The perceived ‘pitch’ of a sound is how animals interpret frequency, from the physical apparatus of the ears to the interpreting brain. Pitch and frequency are not the same thing, as is often assumed: frequency is a physical measurement, while pitch is a perception.

- amplitude

- Amplitude is the measure of the positive or negative change in atmospheric pressure caused by sound waves. Because pressure is force applied over an area, more force produces more amplitude. For instance, striking a percussion instrument harder creates a louder sound. Low amplitude sounds are quiet, while high amplitude sounds are loud. You read amplitude vertically on a waveform. Think of an “amplifier” that is used to increase the volume of a musical instrument: it uses energy to enlarge the oscillations in an electrical signal, which increases the intensity of the sound.
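Wavelength and frequency are tied together by how fast the wave travels: wavelength equals speed divided by frequency. The sketch below is only an illustration of that relationship. It assumes sound moving through air at roughly 343 meters per second (a common approximation for room temperature), and the function name and example frequencies are made up for this example.

```python
# Assumption: approximate speed of sound in air at about 20 °C, in meters per second.
SPEED_OF_SOUND_AIR = 343.0


def wavelength_m(frequency_hz, speed=SPEED_OF_SOUND_AIR):
    """Return the crest-to-crest distance in meters for a given frequency in Hz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


# Higher frequencies pack more cycles into each second, so each cycle is shorter.
for hz in (20, 440, 20_000):
    print(f"{hz} Hz -> {wavelength_m(hz):.4f} m")
```

Because sound travels at different speeds in water or solids, passing a different `speed` value would give a different wavelength for the same frequency.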

Visual Aids

Compare to:

Notice how both examples above show the same duration on the timeline: 1 second. The 4 Hz wave completes 4 cycles in that second, whereas the 9 Hz wave completes 9.
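If you would like to check the comparison yourself, here is a minimal sketch that builds one second of a 4 Hz wave and one second of a 9 Hz wave, then counts the crests in each. It assumes pure sine waves, and the helper names and the choice of 1,000 points per second are illustrative rather than anything Audacity or another editor uses.

```python
import math


def sine_wave(frequency_hz, duration_s=1.0, points_per_second=1000):
    """Return the values of a sine wave measured at evenly spaced moments in time."""
    total_points = int(duration_s * points_per_second)
    return [
        math.sin(2 * math.pi * frequency_hz * n / points_per_second)
        for n in range(total_points)
    ]


def count_crests(values):
    """Count crests: points higher than the one before and not lower than the one after."""
    return sum(
        1
        for i in range(1, len(values) - 1)
        if values[i - 1] < values[i] >= values[i + 1]
    )


for hz in (4, 9):
    print(f"{hz} Hz wave: {count_crests(sine_wave(hz))} crests in 1 second")
```

The number of crests in one second matches the frequency in hertz, which is exactly what the two pictures show.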

Digital Sound Terms

- sample size

- Measures the amplitude, or strength, of the signal at each sample. This is the vertical resolution of the scan of the signal. The sample size is measured in bits and is also known as “bit depth.” Typical CD-quality audio is recorded at a 16-bit sample size, which allows 65,536 possible values (2 to the 16th power).

- sample rate

- The number of samples of sound recorded per second. This is the horizontal resolution of the sound over time. Typical CD-quality audio uses 44,100 samples per second (44.1 kHz).

- bit rate

- The sample size and the sample rate together determine the “bit rate.” For uncompressed audio, it is the sample rate multiplied by the sample size and by the number of channels. This total amount of digitized information is usually measured in bits per second. It can be thought of as:

- “…how much data per second is required to transmit the file, which can then be translated into how big the file is.” (from an excellent article on Teakheadz.com)
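To see how these three terms fit together, here is a small worked example using the CD-quality numbers above. It is a sketch for uncompressed audio only, and it assumes two channels (stereo). The function names and the 3-minute duration are made up for illustration.

```python
SAMPLE_SIZE_BITS = 16    # bit depth of CD-quality audio
SAMPLE_RATE_HZ = 44_100  # samples per second for CD-quality audio
CHANNELS = 2             # assumption: stereo (left and right)


def amplitude_levels(sample_size_bits):
    """Number of distinct amplitude values a single sample can hold."""
    return 2 ** sample_size_bits


def bit_rate(sample_rate_hz, sample_size_bits, channels):
    """Bits per second for uncompressed audio."""
    return sample_rate_hz * sample_size_bits * channels


def file_size_bytes(bits_per_second, duration_s):
    """Approximate size of the raw audio data (8 bits per byte, whole seconds)."""
    return bits_per_second * duration_s // 8


rate = bit_rate(SAMPLE_RATE_HZ, SAMPLE_SIZE_BITS, CHANNELS)
print("Amplitude levels:", amplitude_levels(SAMPLE_SIZE_BITS))
print("Bit rate (bits per second):", rate)
print("3-minute recording (bytes):", file_size_bytes(rate, 180))
```

With these numbers, a 3-minute stereo recording comes to roughly 32 million bytes of raw audio data. Compressed formats such as m4a store the same recording in far less space.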

Audacity Software Tutorial

Audacity is a great free sound editor available on Mac, PC, and Linux platforms. It is very intuitive and easy to use. To read m4a files recorded by a variety of devices (including iPhones), you need the FFmpeg plugin for Audacity. The plugin can be downloaded from our Canvas class or from the source: https://lame.buanzo.org/.